Current Research Topics

AI for Memory System Simulation

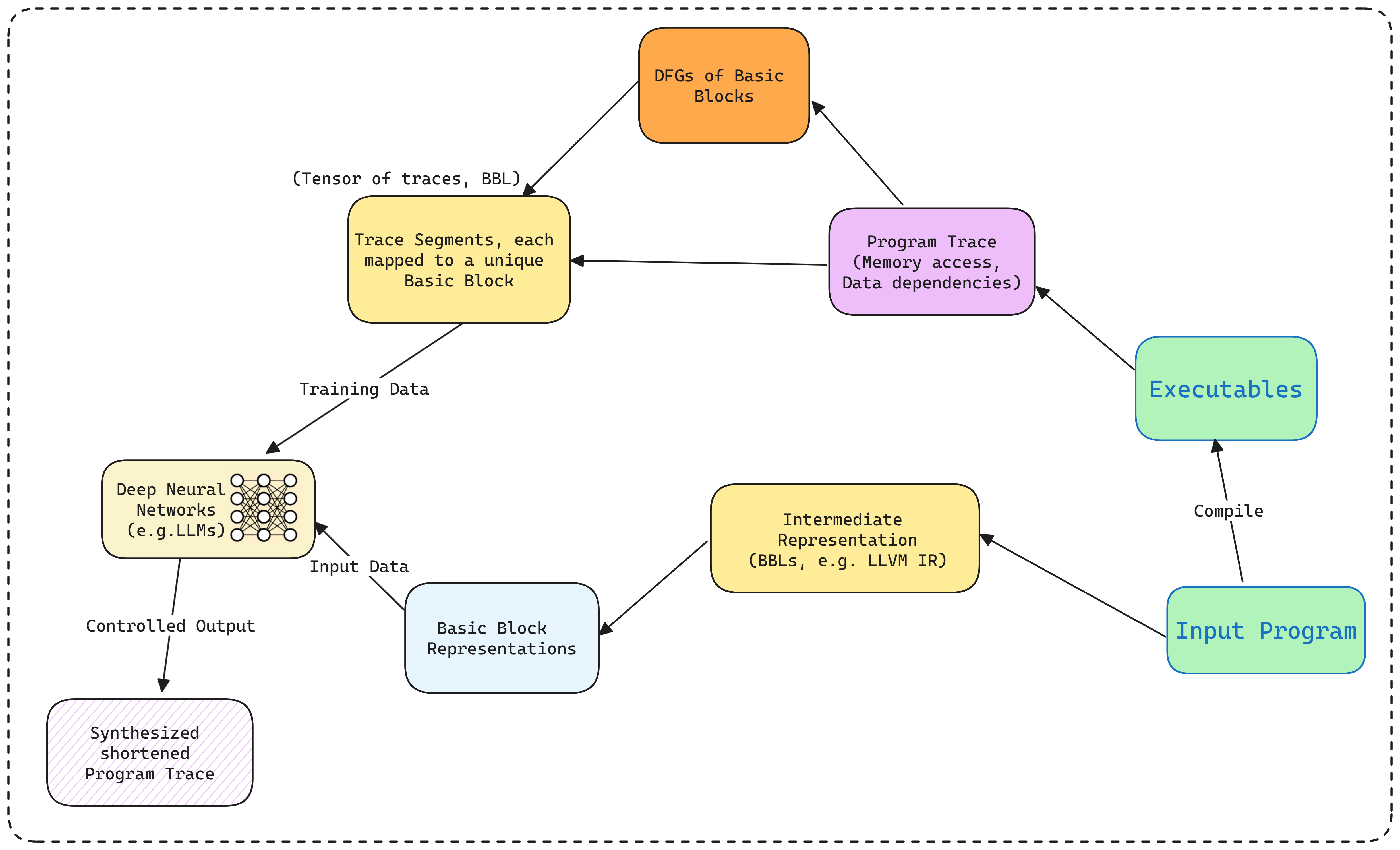

My primary focus is applying generative AI to memory workload synthesis and trace-based simulation. Our paper “Memory Workload Synthesis Using Generative AI” (co-authored with Fan Jiang, Zhenguo Liu, Chen Ding, and Jiang Xu, and accepted at MEMSYS 2023) presented the first use of generative AI, in the form of transformer-based models, to construct short yet representative microbenchmarks from raw program traces. The study compared three learning-based methods (Tab-Base, Tab-RD, Tab-IC) with existing techniques such as HRD and STM. It showed that AI-based approaches can match or exceed their accuracy, while highlighting the importance of domain insights such as reuse distance.

Notably, this work received the Best Paper Award at MEMSYS 2023. Key findings include:

- High fidelity: Our Tab-RD approach predicted L1 cache misses more accurately than traditional HRD, which is crucial for microarchitecture prototyping.

- Reasonable overhead: Training a transformer on a single NVIDIA A100 GPU took about 2.5 hours, a one-time cost after which the approach can scale effectively.

- Potential for synergy: Domain constraints (e.g., reuse-distance thresholds) drastically improved the realism of the generated workloads.
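To make the role of reuse distance concrete, here is a minimal, generic sketch; it is not the paper's Tab-RD or HRD implementation. It assumes a trace of cache-line addresses, computes each access's LRU reuse distance (the number of distinct other lines touched since the previous access to the same line), and applies the classic rule that a fully associative LRU cache with C lines misses exactly on first accesses and on accesses whose reuse distance is at least C. The function names are hypothetical.

```python
from collections import OrderedDict
from typing import Hashable, List, Optional, Sequence


def reuse_distances(trace: Sequence[Hashable]) -> List[Optional[int]]:
    """LRU reuse distance of each access; None marks a first (cold) access."""
    stack: OrderedDict = OrderedDict()  # LRU stack: least recent first, most recent last
    distances: List[Optional[int]] = []
    for line in trace:
        if line in stack:
            keys = list(stack.keys())
            # Number of distinct lines accessed more recently than `line`.
            distances.append(len(keys) - 1 - keys.index(line))
            stack.move_to_end(line)
        else:
            distances.append(None)
            stack[line] = None
    return distances


def lru_miss_ratio(trace: Sequence[Hashable], cache_lines: int) -> float:
    """Miss ratio of a fully associative LRU cache holding `cache_lines` lines."""
    dists = reuse_distances(trace)
    misses = sum(1 for d in dists if d is None or d >= cache_lines)
    return misses / len(dists)


trace = ["A", "B", "C", "A", "B", "B"]
print(reuse_distances(trace))              # [None, None, None, 2, 2, 0]
print(lru_miss_ratio(trace, cache_lines=3))  # 0.5
```

The list-based stack scan costs O(N·M) for N accesses and M distinct lines, which is fine for illustration; tree-based algorithms reduce this to O(N log M).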

Transformer-Based Trace Reduction

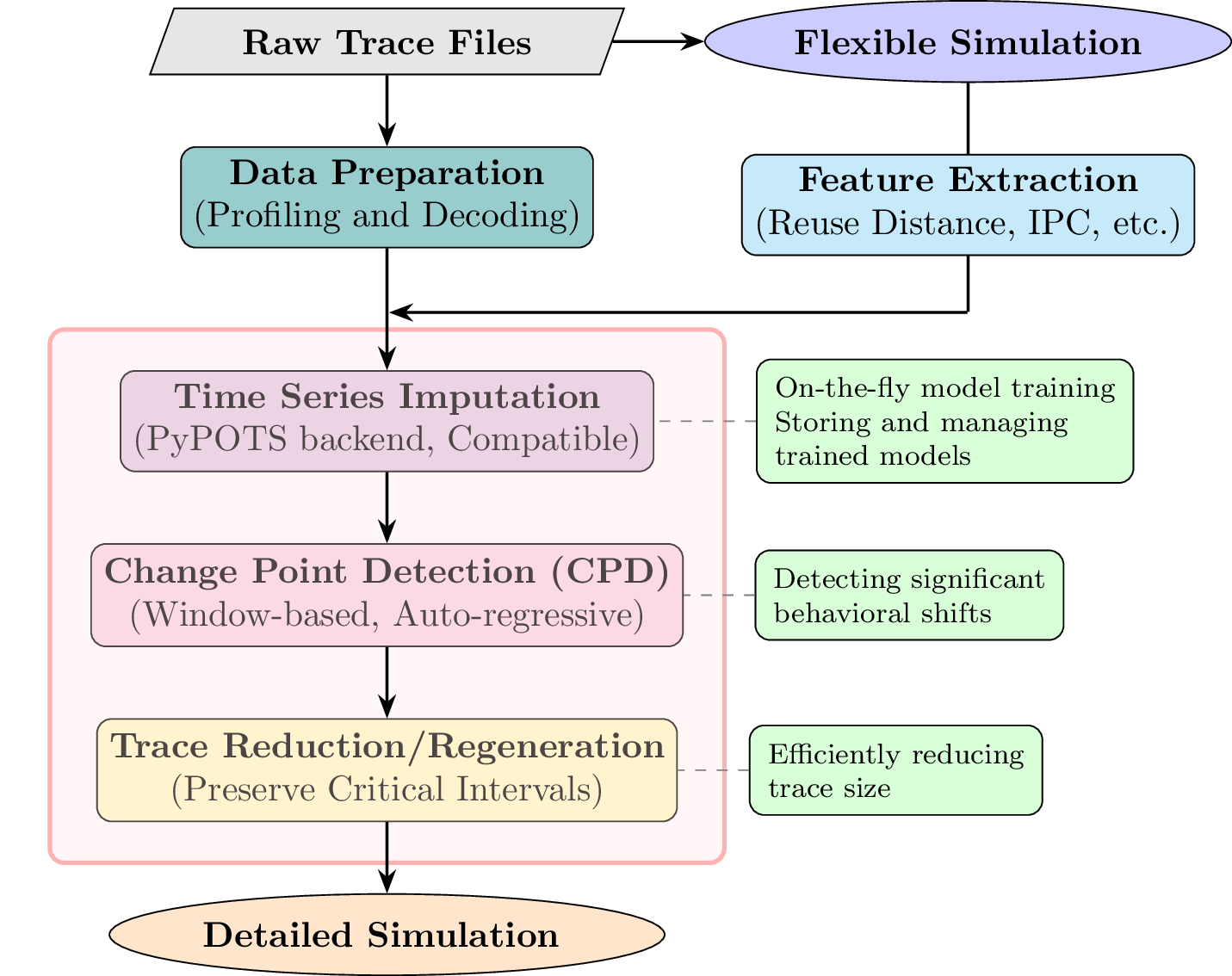

Another paper, “Efficient Trace Reduction via Transformer-Based IPC Imputation and Change Point Detection” (currently under review), proposes a new technique called VitaBeta. Its key elements and results are:

- Multivariate time series imputation to fill in missing IPC values at instruction granularity.

- Adaptive change point detection to preserve instructions around abrupt shifts in program behavior.

- Up to a 3.96× speed-up on SimPoint traces, with minimal error in cache miss rates and pipeline metrics.
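The two ingredients can be illustrated with a deliberately simplified, univariate sketch; it is not the method from the paper. Linear interpolation stands in for transformer-based multivariate imputation, and a fixed-window mean-shift test stands in for adaptive change point detection. The function names and the `window`, `threshold`, and `margin` parameters are hypothetical. Missing IPC samples are marked `None`.

```python
from typing import Dict, List, Optional, Sequence


def impute_linear(ipc: Sequence[Optional[float]]) -> List[float]:
    """Fill None gaps by linear interpolation between the nearest observed values.

    Gaps at either end copy the nearest observed value. This is a simple
    stand-in for learned (e.g., transformer-based) imputation.
    """
    known = [i for i, v in enumerate(ipc) if v is not None]
    if not known:
        raise ValueError("need at least one observed IPC value")
    filled: List[float] = []
    for i, v in enumerate(ipc):
        if v is not None:
            filled.append(float(v))
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            filled.append(float(ipc[right]))
        elif right is None:
            filled.append(float(ipc[left]))
        else:
            t = (i - left) / (right - left)
            filled.append(ipc[left] + t * (ipc[right] - ipc[left]))
    return filled


def change_points(series: Sequence[float], window: int, threshold: float) -> List[int]:
    """Indices where the mean of the next `window` values differs from the
    mean of the previous `window` values by more than `threshold`."""
    n = len(series)
    scores: Dict[int, float] = {}
    for i in range(window, n - window + 1):
        before = sum(series[i - window:i]) / window
        after = sum(series[i:i + window]) / window
        scores[i] = abs(after - before)
    points: List[int] = []
    i = window
    while i <= n - window:
        if scores[i] > threshold:
            # The score ramps up as the windows slide over a shift, so report
            # the strongest position among the next `window` candidates.
            candidates = range(i, min(i + window, n - window + 1))
            best = max(candidates, key=lambda j: scores[j])
            points.append(best)
            i = best + window  # do not report the same shift twice
        else:
            i += 1
    return points


def keep_indices(n: int, points: Sequence[int], margin: int) -> List[int]:
    """Indices in [p - margin, p + margin) around each change point p, clipped to [0, n)."""
    keep = set()
    for p in points:
        keep.update(range(max(0, p - margin), min(n, p + margin)))
    return sorted(keep)


ipc = [1.0, None, 1.0, 1.0, 3.0, None, 3.0, 3.0]  # toy per-instruction IPC
filled = impute_linear(ipc)
cps = change_points(filled, window=2, threshold=0.5)
print(cps)                                       # [4]
print(keep_indices(len(filled), cps, margin=1))  # [3, 4]
```

Because `window` and `threshold` are fixed here, this detector is not adaptive; an adaptive variant might tune them per workload, for example from the local IPC variance.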

Compared to baseline sampling methods (SimPoint, BarrierPoint, etc.), VitaBeta further reduces redundancies in short-yet-complex HPC workloads. We’re especially excited about its compatibility with standard trace formats and its ability to integrate with widely used simulators like ChampSim.
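Since the reduced output must stay in a standard trace format, one simple way to emit it is to copy only the retained instruction records. The sketch below assumes ChampSim's binary `input_instr` record as written by its tracer: an 8-byte instruction pointer, two branch flag bytes, 2 destination and 4 source register bytes, then 2 destination and 4 source 8-byte memory addresses, 64 bytes in total and little-endian. It also assumes an already decompressed trace. Verify the layout against the ChampSim version you use; the helper names are hypothetical, and the indices to keep would come from the reduction step.

```python
import struct
from typing import Iterable, Iterator, List, Set

# Assumed ChampSim input_instr layout (little-endian, no padding):
# ip (u64), is_branch (u8), branch_taken (u8),
# destination_registers[2] (u8), source_registers[4] (u8),
# destination_memory[2] (u64), source_memory[4] (u64)
RECORD = struct.Struct("<QBB2B4B2Q4Q")
assert RECORD.size == 64


def iter_records(data: bytes) -> Iterator[bytes]:
    """Yield raw 64-byte instruction records from an uncompressed trace."""
    if len(data) % RECORD.size:
        raise ValueError("trace length is not a multiple of the record size")
    for off in range(0, len(data), RECORD.size):
        yield data[off:off + RECORD.size]


def reduce_trace(data: bytes, keep: Iterable[int]) -> bytes:
    """Return a trace containing only the records whose indices are in `keep`."""
    keep_set: Set[int] = set(keep)
    return b"".join(rec for i, rec in enumerate(iter_records(data)) if i in keep_set)


def instruction_pointers(data: bytes) -> List[int]:
    """Decode only the ip field of every record, for inspection."""
    return [RECORD.unpack(rec)[0] for rec in iter_records(data)]


def make_record(ip: int) -> bytes:
    # Hypothetical non-branch instruction with no register or memory operands.
    return RECORD.pack(ip, *([0] * 14))


trace = b"".join(make_record(0x400000 + 4 * i) for i in range(8))
reduced = reduce_trace(trace, [3, 4])
print([hex(ip) for ip in instruction_pointers(reduced)])  # ['0x40000c', '0x400010']
```

ChampSim traces are commonly distributed `.xz`-compressed; in that case Python's `lzma.open(path, "rb")` could supply the bytes.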

Interested in these areas (or bridging them)? Feel free to contact me directly at cshiai@connect.ust.hk. I’m eager to explore new frontiers in HPC, AI, and advanced instrumentation.

Publications

Below are selected publications spanning memory-system design and electron microscopy. For a full list or inquiries, feel free to reach out to me at cshiai@connect.ust.hk.

2023

Memory Workload Synthesis Using Generative AI (Best Paper Award)

- Authors: Chengao SHI, Fan JIANG, Zhenguo LIU, Chen DING, Jiang XU

- Conference: MEMSYS 2023

- Abstract (Brief): Introduces the first use of generative AI for memory workload synthesis. Compares three transformer-based approaches (Tab-Base, Tab-RD, Tab-IC) against classical reuse-distance-based models (HRD, STM). Demonstrates that the right combination of domain insights (reuse distance) and advanced ML can improve simulation fidelity, sometimes exceeding state-of-the-art methods.